If I’m lucky, the only reason I get a phone call before 7am is that somebody on the East Coast forgot about the time zones between them and me. The alternative is almost always bad news.

Today I wasn’t lucky, and neither were a huge number of readers and users at GigaOM who received multiple copies of our daily newsletter. For a news and research organization that values — loves — its users as much as we do at GigaOM, this was all hell breaking loose. Our efforts to address the problem also represent some of the best of what we are and made me proud to be part of the team.

As with most emergencies, details were sketchy at first, and some were hard to spot among the virtual smoke and klaxons. What was clear was that people were getting more email than they expected from us and we had to stop it. I confirmed it was stopped within minutes, and our editorial team jumped in to address the issue via Twitter. As the rest of the team came online, we got to work identifying the cause and extent of the problem (engineering), answering every one of the hundreds of emails we received (support and product management), and getting back to doing great reporting (editorial).

First, some background: GigaOM publishes a number of email newsletters. If you subscribe to our daily newsletters you can pick from our eight channels of coverage and we custom build a newsletter just for the specific channels you select. There are up to 256 variations, so we’ve automated the task of iterating through each variation, generating content, and queueing it for delivery to the correct segment of our mail list.
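The "up to 256" figure is 2^8: each subscriber either picks or skips each of the eight channels, and one of those 256 combinations is the empty selection, which needs no newsletter. Here is a minimal Python sketch of the iteration. It uses placeholder channel names, since the real ones aren't listed here, and stubs out content generation and queueing with a hypothetical `build_and_queue` function:

```python
from itertools import combinations

# Placeholder names; the actual eight channels are not named in this post.
CHANNELS = [f"channel_{i}" for i in range(1, 9)]

def newsletter_variations(channels):
    """Yield every non-empty combination of channels, one per newsletter variation."""
    for size in range(1, len(channels) + 1):
        for combo in combinations(channels, size):
            yield frozenset(combo)

def build_and_queue(variation):
    """Hypothetical stub: in a real pipeline this would generate content for
    these channels and queue it for the matching list segment."""
    return sorted(variation)

queued = [build_and_queue(v) for v in newsletter_variations(CHANNELS)]
print(len(queued))  # 255: the 256 subsets minus the empty one
```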

Some of those segments have a lot of subscribers, others have only a few, but something stood out today: the number of recipients for each segment was way, way, WAY larger than it should have been. We poked at the question from a lot of angles, but it kept coming back to a problem with the list segmentation today that didn’t happen yesterday. That became a question for our email service provider. Though there was some initial confusion, MailChimp identified and took ownership of the problem.

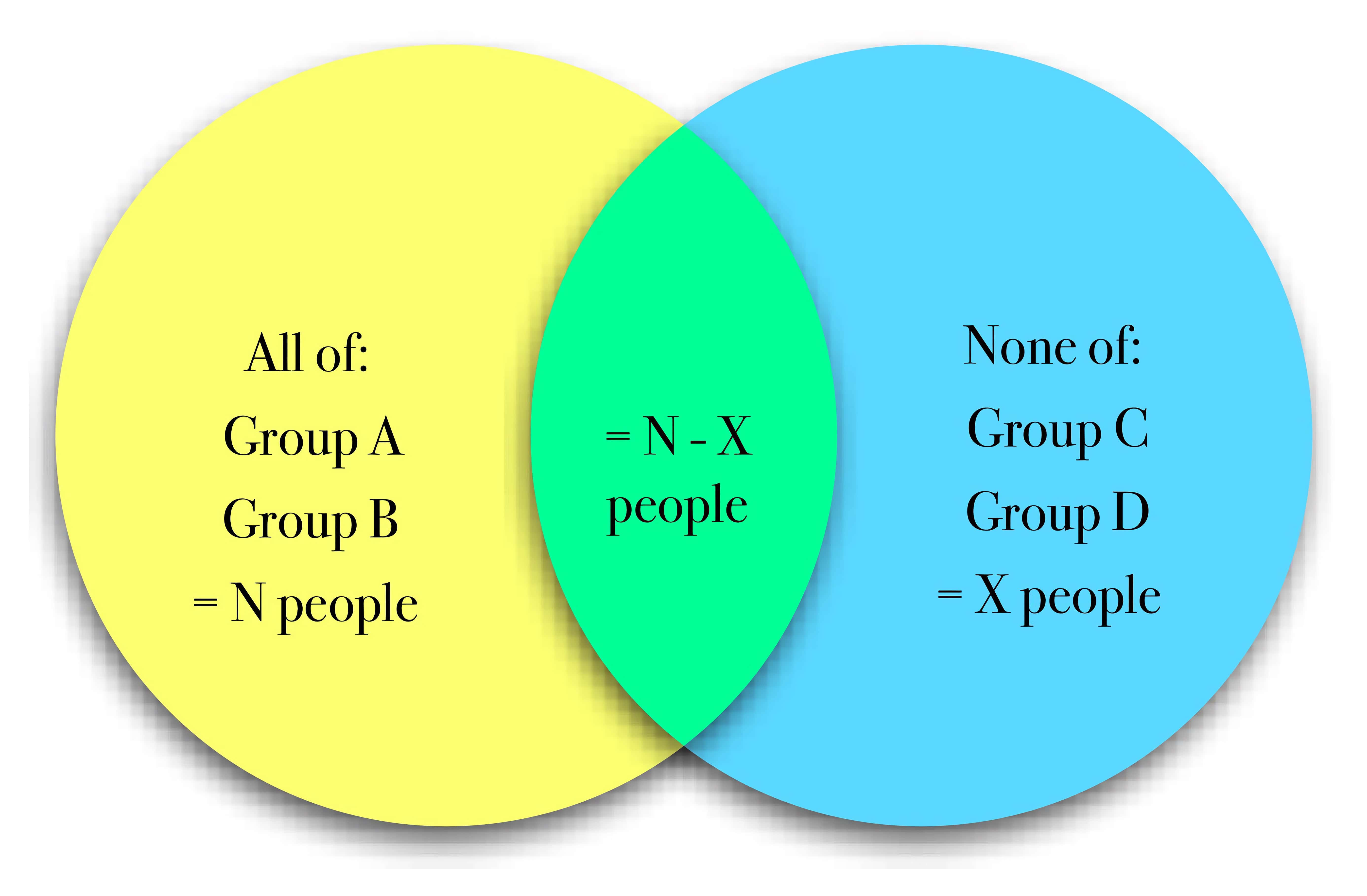

Our editorial channels in MailChimp are represented by groups, and to make the segments work correctly we have to use two matching rules: a positive match that selects all the users subscribing to a set of channels, and a negative match that excludes users subscribed to other channels. That’s what prevents a user who’s subscribed to channels A, B, and C from receiving the newsletter created for just channels A and B. When those rules are properly ANDed everything works.
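The two rules can be modeled with plain sets. This is a toy sketch, not MailChimp's segment engine. It assumes the positive match means "subscribed to all of this variation's channels" and the negative match means "subscribed to none of the other channels." The channels and subscribers are hypothetical:

```python
# Toy model of the two segment rules; channels and subscribers are hypothetical.
ALL_CHANNELS = frozenset({"A", "B", "C"})

subscribers = {
    "alice": {"A", "B"},
    "bob": {"A", "B", "C"},
    "carol": {"A"},
}

def positive_match(channels, variation):
    # Subscriber has every channel in this variation.
    return variation <= channels

def negative_match(channels, variation):
    # Subscriber has no channel outside this variation.
    return not (channels & (ALL_CHANNELS - variation))

def segment(variation):
    """Recipients of one newsletter variation, with the two rules ANDed."""
    return {user for user, chans in subscribers.items()
            if positive_match(chans, variation) and negative_match(chans, variation)}

print(segment(frozenset({"A", "B"})))  # {'alice'}
```

ANDed together, the two rules amount to an exact match. A subscriber lands in a variation's segment only when their channel set equals that variation, so `bob` (A, B, C) is kept out of the A-and-B newsletter.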

It might be a little clearer as a Venn diagram:

Venn diagram: the two rules ANDed.

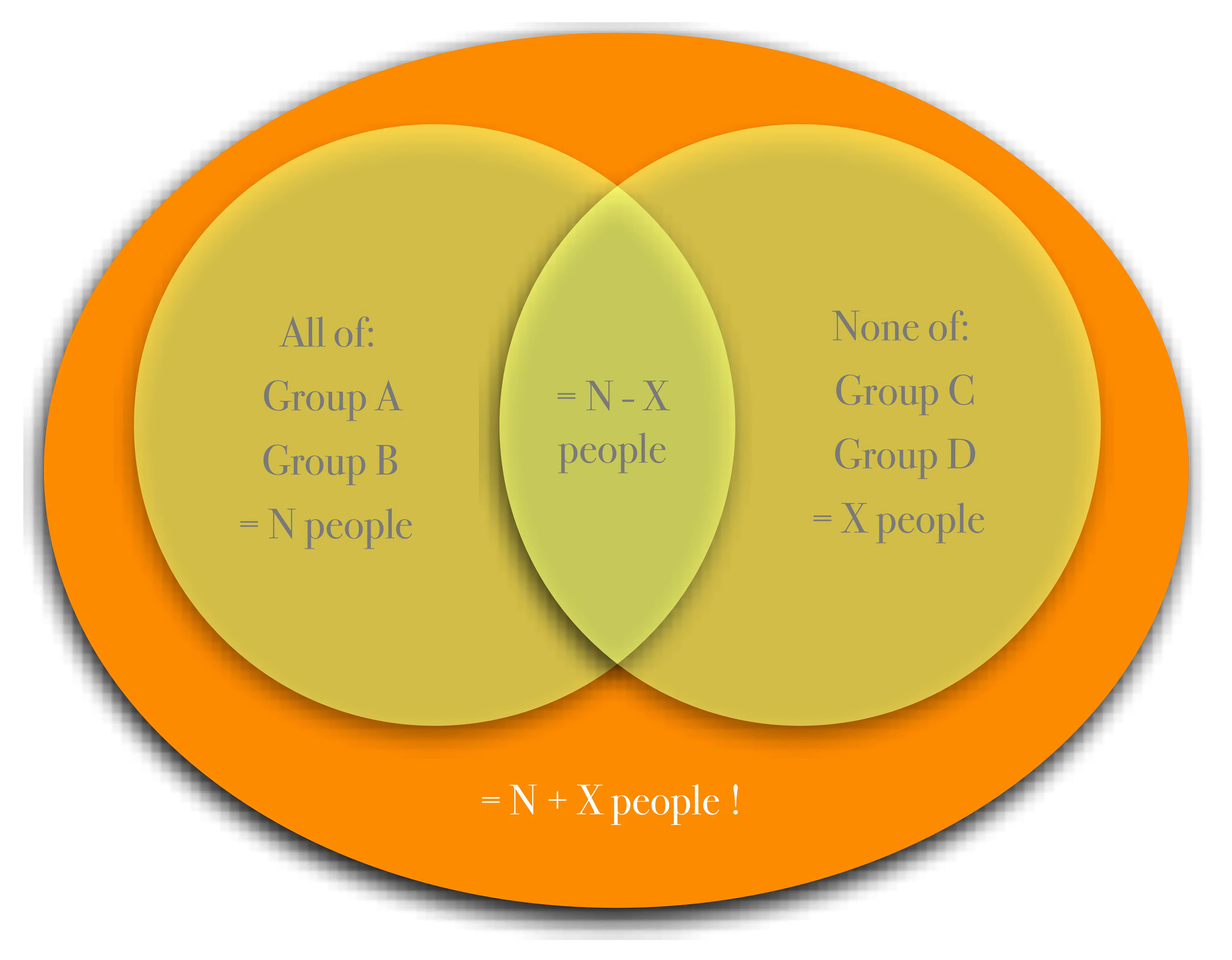

Unfortunately, what I was seeing in the logs looked more like this:

Venn diagram: the two rules ORed.

That is, rather than excluding people, the second rule in each segment dramatically increased the number of recipients for each version of the newsletter. Logically, the rules were being ORed, the exact opposite of what we expected and of how we’d written the logic. Where we had previously segmented everything so that people received only the news they wanted, we were now sending them dozens of newsletters, each slightly different from the others.
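To see why ORing the rules inflates the counts, here is a self-contained toy model. It keeps the same assumptions: the positive rule means "subscribed to all of a variation's channels," and the negative rule means "subscribed to none of the others." It uses three hypothetical channels and three hypothetical subscribers, and counts how many of the seven variations each subscriber would receive under AND and under OR. It illustrates the failure mode; it is not a reconstruction of MailChimp's actual evaluation logic:

```python
from itertools import combinations

# Hypothetical channels and subscribers for illustration only.
CHANNELS = ("A", "B", "C")

subscribers = {
    "alice": {"A", "B"},
    "bob": {"A", "B", "C"},
    "dave": set(),  # on the list for another reason, no daily channels
}

def variations():
    """Every non-empty combination of channels (7 for three channels)."""
    for size in range(1, len(CHANNELS) + 1):
        for combo in combinations(CHANNELS, size):
            yield frozenset(combo)

def matches(chans, variation, combine):
    positive = variation <= chans                            # has all of the variation
    negative = not (chans & (set(CHANNELS) - variation))     # has none of the rest
    return combine(positive, negative)

def newsletters_per_user(combine):
    """How many variations each subscriber would be sent."""
    return {user: sum(matches(chans, v, combine) for v in variations())
            for user, chans in subscribers.items()}

print(newsletters_per_user(lambda p, n: p and n))  # {'alice': 1, 'bob': 1, 'dave': 0}
print(newsletters_per_user(lambda p, n: p or n))   # {'alice': 4, 'bob': 7, 'dave': 7}
```

Under OR, `dave` has no daily channels at all, yet he trivially satisfies "none of the other channels" for every variation, so in this model he receives all seven.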

Even worse, because our mailing list also includes people who are subscribed to other newsletters or have other relationships with us, the number of people affected was huge. In some cases, people who had never subscribed to, or previously received, any of our newsletters faced an inbox with more than 40 of them.

What went right, or at least partially right

Our script starts processing newsletters at 6:45AM PDT. MailChimp’s internal monitoring shut down our account at 6:53, just eight minutes later, which is about when the calls started flooding in to me. That automatic shutdown was good, and faster than I could have stopped the list processing myself, but I think there’s room for improvement. Perhaps a rule that prevents sending more than one campaign to the same user in any 15-minute period would work. I would certainly appreciate an alarm like that.
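The 15-minute rule suggested above could look something like the following minimal sketch. `DuplicateSendGuard`, its parameters, and the alarm threshold are all hypothetical. This is not a MailChimp feature or API, just an illustration of a per-recipient minimum-interval check plus an alarm:

```python
from datetime import datetime, timedelta

class DuplicateSendGuard:
    """Hypothetical guard: refuse a second campaign to the same address within
    `window` of the last allowed send, and raise an alarm flag once
    `alarm_threshold` duplicates have been blocked."""

    def __init__(self, window=timedelta(minutes=15), alarm_threshold=100):
        self.window = window
        self.alarm_threshold = alarm_threshold
        self.last_sent = {}  # address -> time of the last allowed send
        self.blocked = 0
        self.alarm = False

    def allow(self, address, now):
        previous = self.last_sent.get(address)
        if previous is not None and now - previous < self.window:
            self.blocked += 1
            if self.blocked >= self.alarm_threshold:
                self.alarm = True  # e.g. pause sending and page a human
            return False
        self.last_sent[address] = now
        return True

guard = DuplicateSendGuard(alarm_threshold=2)
start = datetime(2000, 1, 1, 6, 45)  # arbitrary date; only the offsets matter
print(guard.allow("reader@example.com", start))                          # True
print(guard.allow("reader@example.com", start + timedelta(minutes=1)))   # False
print(guard.allow("reader@example.com", start + timedelta(minutes=2)))   # False
print(guard.alarm)                                                       # True
print(guard.allow("reader@example.com", start + timedelta(minutes=20)))  # True
```

Counting blocked duplicates, rather than stopping on the first one, leaves room for a legitimate occasional double send while still tripping quickly when every recipient is getting a second copy.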

I’m also proud of our team’s work. We hadn’t trained for this, but we knew what to do. We quickly divided tasks on the engineering side while the rest of the company mobilized to respond to the many people who contacted us. We did a reasonably good job of communicating internally, and I think we learned how to do it better next time.

I don’t know if it’s a sufficient apology for everybody, but our public announcement and personal attention to everybody who contacted us were truly heartfelt, and the additional explanation that came from one of our senior writers in the comments there is incredibly sincere: GigaOM is “the type of business where everyone takes responsibility for delivering a quality product.”

The MailChimp team’s apologies (on Twitter and on their blog) and the postmortem analysis they’ve provided have been great as well. I’m especially interested in the adversarial QA they’ll be doing going forward.