Balancing data and activity between shards

Your consistent hash ring leads to inconsistent performance:

The basic consistent hashing algorithm presents some challenges. First, the random position assignment of each node on the ring leads to non-uniform data and load distribution. Second, the basic algorithm is oblivious to the heterogeneity in the performance of nodes.

From https://www.cs.cornell.edu/projects/ladis2009/papers/lakshman-ladis2009.pdf, which explains that Cassandra addresses that common problem by “analyz[ing] load information on the ring and have lightly loaded nodes move on the ring to alleviate heavily loaded nodes.”

A fundamental problem that confronts [sharded] applications is the efficient location of the node that stores a desired data item.

From https://pdos.csail.mit.edu/papers/ton:chord/paper-ton.pdf, a frequently cited paper which goes on to describe a mechanism that “adapts efficiently as nodes join and leave the system, and can answer queries even if the system is continuously changing.”

Though the Stoica paper actually addresses peer-to-peer applications like Napster and never mentions “sharding,” it introduced some important details for how sharded systems should work and self-healing. Database developers, including those designing Cassandra, took note of those details and added micro-sharding or micro-partitions.

To combat imbalance, many of Google’s systems generate many more partitions than there are machines in the service, then do dynamic assignment and load balancing of these partitions to particular machines. Load balancing is then a matter of moving responsibility for one of these small partitions from one machine to another. With an average of, say, 20 partitions per machine, the system can shed load in roughly 5% increments and in 1/20th the time it would take if the system simply had a one-to-one mapping of partitions to machines. The BigTable distributed-storage system stores data in tablets, with each machine managing between 20 and 1,000 tablets at a time. Failure-recovery speed is also improved through micro-partitioning, since many machines pick up one unit of work when a machine failure occurs. This method of using micro-partitions is similar to the virtual servers notion as described in Stoica and the virtual-processor-partitioning technique in DeWitt et al.

An enhancement of the micro-partitioning scheme is to detect or even predict certain items that are likely to cause load imbalance and create additional replicas of these items. Load-balancing systems can then use the additional replicas to spread the load of these hot micro-partitions across multiple machines without having to actually move micro-partitions. Google’s main Web search system uses this approach, making additional copies of popular and important documents in multiple micro-partitions. At various times in Google’s Web search system’s evolution, it has also created micro-partitions biased toward particular document languages and adjusted replication of these micro-partitions as the mix of query languages changes through the course of a typical day. Query mixes can also change abruptly, as when, say, an Asian data-center outage causes a large fraction of Asian-language queries to be directed to a North American facility, materially changing its workload behavior.

From https://cseweb.ucsd.edu/~gmporter/classes/fa17/cse124/post/schedule/p74-dean.pdf, which also describes the performance and availability advantages of using request replication.

Many shards on a small number of nodes may increase the risk of data loss

The calculation indicates that in order to reduce the probability of data loss, you can reduce the number of partitions or increase the replication factor. Using more replicas costs more, so it’s not ideal for large clusters that are already expensive. However, the number of partitions presents an interesting trade-off. Cassandra originally used one partition per node, but then switched to 256 partitions per node a few years ago in order to achieve better load distribution and more efficient rebalancing. The downside, as we can see from this calculation, is a much higher probability of losing at least one of the partitions.

From https://martin.kleppmann.com/2017/01/26/data-loss-in-large-clusters.html, which suggests that micro-sharding data can have a negative effect on availability (or increase the chance of data loss, as that article is focused on).

Shuffling shards to minimize problems with neighbors

With Shuffle Sharding we can do much better again than traditional sharding. It’s deceptively simple. All we do is that for each customer we assign them to two servers pretty much at random.

So for example, [customer A] is assigned to nodes 1 and 4. Suppose we get a problem request from [customer A]? What happens?

Well … it could take out nodes 1 and 4. So [customer A] is having a bad experience now. Amazing devops teams are on it, etc, but it’s still not great for them. Not much we can do about that. But what about everyone else?

Well, if we look at [customer A]’s neighbors. They’re still fine! As long as their client is fault tolerant, which can be as simple as using retries, they can still get service. [Customer B] gets service from node 2 for example.

From https://twitter.com/colmmacc/status/1034492056968736768, which excitedly points out that shuffle sharding techniques can reduce the extent of failure caused by a bad client from taking down an entire service for all users to taking down the service just for themselves:

O.k. let’s PAUSE for a second and appreciate that. Same number of nodes. Same number of nodes for each customer. Same number of customers. Just by using MATH, we’ve reduced the blast radius to 1 customer! That’s INSANE.

Using short timeouts and repeating requests to reduce p99 latency

Dealing with Slow Processing With Replication

- Replicate Processing

- If a request is slow: Start a new one!!

- New request may run on a machine with no problems

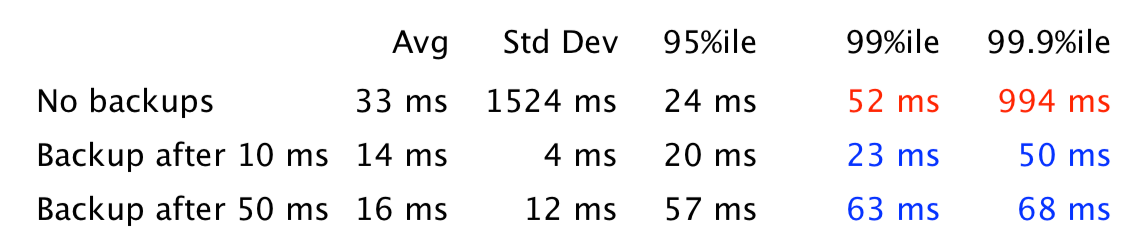

From http://db.cs.duke.edu/courses/fall15/cps214/Day15.pdf, which references https://ai.google/research/pubs/pub44875 and this comparison of request times while waiting for a single critical path request, vs triggering a backup (duplicate request) after 10ms or 50ms:

Backups in this context are duplicate/repeat requests triggered after a short 10ms or 50ms timeout

However, those CS514 class notes do warn: “duplicating the request may not help if the new request uses the same data.” That is, if all the systems that can answer the request have the same underlying performance bottleneck, it won’t help to create multiple requests.